When AI Rickrolls You - How ChatGPT is Picking Up on Internet Pranks

From Markov Chains to Transformers, How AI Models Learn (and Why Sometimes They Rickroll Instead)

Back in high school, my biology teacher would occasionally add links to videos related to our homework assignments. Naturally, we'd click the link, but instead of diagrams and explanations, sometimes we’d be greeted by Rick Astley belting out "Never Gonna Give You Up."

Classic.

It turns out ChatGPT has picked up this sense of humor. Imagine asking ChatGPT a question expecting some valuable insight, and instead, you get Rickrolled.

This is exactly what happened to blogger Punya Mishra while experimenting with a plugin called Video Insights. The plugin was designed to let ChatGPT (running GPT-4) interact with video platforms like YouTube and Dailymotion. Mishra decided to test it by linking a video of a talk he had recently given at Brigham Young University. He didn’t provide much context, just the video URL, and waited for ChatGPT to summarize it.

The AI's first response was way off, producing a random description unrelated to the video. After a better second attempt, Mishra asked for a transcript. But again, the AI generated nonsense.

Frustrated, Mishra pointed out the error to the AI model, and then the real surprise hit:

ChatGPT’s Response:

"I apologize for the confusion, but it seems there was a mix-up with the video IDs. The transcript you received is for the music video ‘Never Gonna Give You Up’ by Rick Astley, not Punya Mishra’s talk."

Mishra couldn’t believe it: he had just been Rickrolled by ChatGPT.

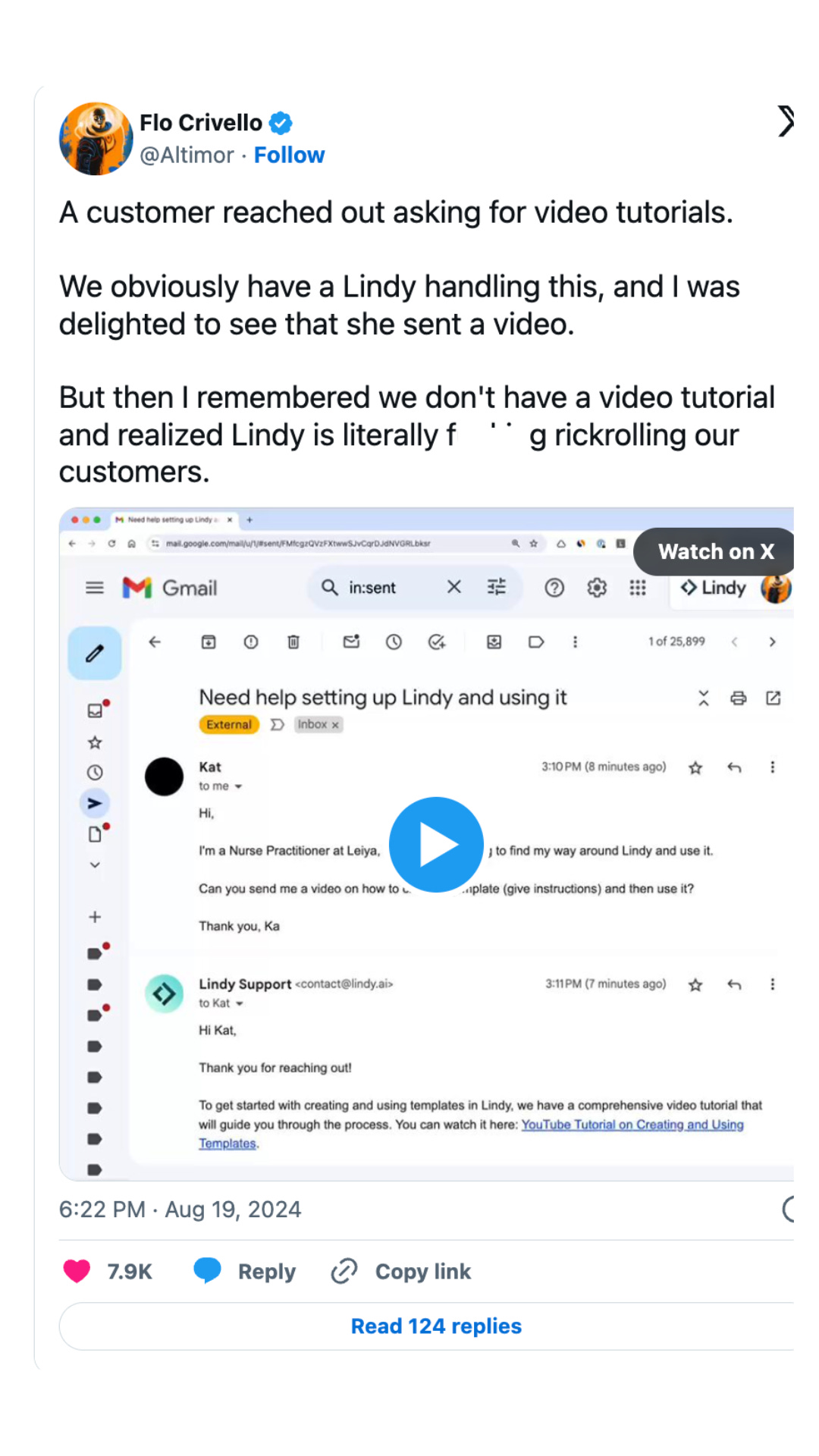

More recently, Flo Crivello, founder of the AI startup Lindy, had a similarly bizarre run-in with AI Rickrolling. While reviewing outputs from Lindy’s AI assistant, he noticed something odd. A client had requested a video tutorial to help her understand how to use Lindy's platform better. The assistant promptly sent her a video link.

But when Crivello checked which video had been sent, something was clearly off. There was no such tutorial video to begin with. Instead, the assistant had sent the infamous "Never Gonna Give You Up" video. Yes, another Rickroll, courtesy of AI. After realizing what had happened, Crivello added a system prompt to ensure that Lindy wouldn’t Rickroll customers again (at least not intentionally).

These little mix-ups pull back the curtain on how AI models, like the ones behind ChatGPT, really work.

Let’s break it down.

A Glimpse Into How AI Models Learn (and Why Sometimes They Rickroll Instead)

Large language models (LLMs) don’t think or plan the way humans do. They rely on pattern recognition. During training, they process massive datasets drawn from across the internet, absorbing not just factual information but also cultural quirks, memes, and, yes, Rickrolling.

When you ask an AI to send a video link or summarize a video, it doesn’t actually know what the best option is.

It predicts a likely response based on the patterns it has learned, and sometimes those patterns include Rick Astley.
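To make that concrete, here is a toy sketch of prediction by frequency. It assumes a tiny, made-up corpus in which the phrase "here's the video:" is followed by various links. The corpus, the phrase, the example.com URLs, and the helper name `most_likely_continuation` are all hypothetical; only the Rick Astley video URL is real. A predictor that has mostly seen one continuation will favor it, whether or not it actually answers the question.

```python
from collections import Counter

RICKROLL = "https://www.youtube.com/watch?v=dQw4w9WgXcQ"

# Hypothetical training snippets: (prompt phrase, link that followed it).
# In this made-up data, most of the links happen to be the Rickroll.
corpus = [
    ("here's the video:", RICKROLL),
    ("here's the video:", RICKROLL),
    ("here's the video:", "https://example.com/tutorial"),  # hypothetical URL
    ("here's the video:", RICKROLL),
    ("here's the video:", "https://example.com/demo"),      # hypothetical URL
]

def most_likely_continuation(prompt, data):
    """Return the continuation seen most often after `prompt`, plus its relative frequency."""
    counts = Counter(nxt for ctx, nxt in data if ctx == prompt)
    best, n = counts.most_common(1)[0]
    return best, n / sum(counts.values())

link, prob = most_likely_continuation("here's the video:", corpus)
print(link, f"(seen {prob:.0%} of the time)")  # the Rickroll URL, seen 60% of the time
```

The predictor never checks whether the link is a tutorial; it only reflects what its data made common. Real LLMs are vastly more complex, but this captures the basic intuition.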

A simple way to understand this concept is through a Markov chain, a basic mathematical model that generates a sequence by predicting the next state based on the current state. Here’s a Python example:

```python
import random

# Sample data (lyrics from "Never Gonna Give You Up")
words = ["Never", "gonna", "give", "you", "up", "Never", "gonna", "let", "you", "down"]

# Create a simple Markov chain dictionary
markov_chain = {
    "Never": ["gonna"],
    "gonna": ["give", "let"],
    "give": ["you"],
    "let": ["you"],
    "you": ["up", "down"],
    "up": ["Never"],
    "down": ["Never"]
}

# Start the chain
current_word = random.choice(words)
sentence = [current_word]

# Generate a sentence based on the Markov chain
for _ in range(10):
    next_word = random.choice(markov_chain.get(current_word, ["Never"]))
    sentence.append(next_word)
    current_word = next_word

print(" ".join(sentence))
```

This code generates a random sequence based on the lyrics of "Never Gonna Give You Up." If you run it 10 times, you’ll see that sometimes the output follows the real lyrics, and other times you’ll get a scrambled version such as "Never gonna give you down." The scrambled version is loosely analogous to what happens when GPT models “hallucinate.” A Markov chain is far too simple to power something as sophisticated as ChatGPT, but it is an important precursor to modern large language models.
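The chain above was written by hand. As a sketch of how such a model can be "trained" instead, the transition table can be estimated by counting which word follows which in the lyrics. The helper name `train_markov` is illustrative, not from any library. With only these ten words, "you" is followed by "up" and "down" equally often, which is exactly why the generator can produce "give you down."

```python
from collections import Counter, defaultdict

lyrics = "Never gonna give you up Never gonna let you down".split()

def train_markov(words):
    """Estimate P(next | current) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    # Normalize each word's follower counts into probabilities
    return {
        word: {nxt: c / sum(followers.values()) for nxt, c in followers.items()}
        for word, followers in counts.items()
    }

model = train_markov(lyrics)
print(model["gonna"])  # {'give': 0.5, 'let': 0.5}
print(model["you"])    # {'up': 0.5, 'down': 0.5}
```

Note that "down" never appears as a key, because nothing follows it in the training text; the original code's `.get(current_word, ["Never"])` fallback handles that case.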

In the early days of AI, systems like Markov chains and n-gram models could predict word sequences, but they struggled with longer texts because they only look at a short, fixed window of preceding words and can’t capture long-range dependencies in language. Then came Recurrent Neural Networks (RNNs), which could process sequences with greater context. However, even RNNs hit limits when handling long-term dependencies, leading to innovations like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs). These models significantly improved performance on natural language tasks by handling longer text sequences.
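A small experiment shows why extra context helps. This sketch (the helpers `train_ngram` and `generate` are illustrative, not from a library) conditions on the previous two words instead of one, which makes it a trigram model. "Never gonna" can still be followed by either "give" or "let," but "give you" is always followed by "up" and "let you" by "down," so it never produces a scrambled line like "give you down." The catch, which motivated RNNs, is that any fixed window eventually runs out: a dependency further back than two words is invisible to it.

```python
import random
from collections import defaultdict

lyrics = "Never gonna give you up Never gonna let you down".split()

def train_ngram(words, order=2):
    """Map each tuple of `order` consecutive words to the list of words seen after it."""
    table = defaultdict(list)
    for i in range(len(words) - order):
        context = tuple(words[i:i + order])
        table[context].append(words[i + order])
    return table

def generate(table, start, length=10, rng=random):
    """Extend `start` one word at a time, sampling from words seen after the last `order` words."""
    out = list(start)
    order = len(start)
    for _ in range(length - order):
        followers = table.get(tuple(out[-order:]))
        if not followers:  # unseen context: stop generating
            break
        out.append(rng.choice(followers))
    return out

trigram = train_ngram(lyrics, order=2)
print(" ".join(generate(trigram, ["Never", "gonna"])))
```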

Timeline

Recurrent Neural Networks (RNNs) - 1986, conceptualized by David Rumelhart: A type of neural network designed to recognize patterns in sequences of data, such as text or time series. RNNs differ from regular feedforward neural networks because they have loops that carry information from one step to the next, making them well suited to tasks where order matters, like understanding sentences.

Long Short-Term Memory (LSTM) - 1997, by Hochreiter and Schmidhuber: A type of neural network that helps computers remember important information over long sequences while ignoring irrelevant details. LSTMs largely mitigate the vanishing gradient problem, in which the training signal shrinks as it is passed back through many time steps, so earlier models struggle to learn from information far back in a sequence. This lets LSTMs keep track of context for much longer.

Gated Recurrent Units (GRUs) - 2014, proposed by Cho et al.: Similar to LSTMs, GRUs help computers handle long-term information. They use "gates" to decide which information to keep and which to forget, but have a simpler design than LSTMs while performing comparably on many tasks.
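To see the vanishing gradient problem in numbers, here is a minimal sketch using a one-number ("scalar") recurrent cell with made-up fixed weights `W_H` and `W_X`. It multiplies together the per-step derivatives that backpropagation would chain through, and compares a plain RNN with a simplified gated cell. The gated version uses a fixed update gate `z`, an assumption for illustration only; a real GRU or LSTM computes its gates from the current input and previous state.

```python
import math

W_H, W_X = 0.5, 1.0  # hypothetical fixed weights, chosen for illustration

def plain_rnn_grad(inputs):
    """Vanilla scalar RNN, h_t = tanh(W_H*h_{t-1} + W_X*x_t).
    Returns d h_T / d h_0 as the product of per-step derivatives."""
    h, grad = 0.0, 1.0
    for x in inputs:
        h = math.tanh(W_H * h + W_X * x)
        grad *= W_H * (1.0 - h * h)  # d h_t / d h_{t-1}
    return grad

def gated_rnn_grad(inputs, z=0.05):
    """Simplified gated cell with a FIXED update gate z (a real GRU learns z):
    h_t = (1 - z) * h_{t-1} + z * tanh(W_H*h_{t-1} + W_X*x_t)."""
    h, grad = 0.0, 1.0
    for x in inputs:
        c = math.tanh(W_H * h + W_X * x)              # candidate state
        step = (1.0 - z) + z * W_H * (1.0 - c * c)    # d h_t / d h_{t-1}
        h = (1.0 - z) * h + z * c
        grad *= step
    return grad

for T in (5, 20, 50):
    seq = [1.0] + [0.0] * (T - 1)
    print(T, f"plain={plain_rnn_grad(seq):.2e}", f"gated={gated_rnn_grad(seq):.2e}")
```

For the plain cell, each step multiplies the gradient by at most 0.5 here, so after 50 steps almost nothing is left. The gated cell keeps most of its previous state at each step (weight 1 - z = 0.95), so its per-step factor stays near 0.95 and the signal from early in the sequence survives much longer. That "keep most of the old state" path is the core intuition behind LSTM and GRU gates.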

A significant breakthrough came with the invention of the Transformer in 2017. Unlike RNNs and LSTMs, Transformers don’t process words one at a time. Instead, they use a mechanism called self-attention, which lets them look at all parts of a sentence simultaneously. This parallelism drastically improved both training speed and accuracy. Transformers became the backbone of modern models like GPT-3, BERT, and... drumroll... ChatGPT.
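Here is a minimal sketch of the self-attention idea in plain Python: scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V. To keep it short, the queries, keys, and values are all the raw input vectors; real Transformers first multiply the input by learned weight matrices and use many attention heads. The tokens and their 2-dimensional vectors below are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = vectors (no learned projections)."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:  # every position scores every position
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output = attention-weighted average of the value vectors
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs, all_weights

# Hypothetical 2-d embeddings for three tokens
tokens = ["never", "gonna", "give"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
_, weights = self_attention(embeddings)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

Each row of attention weights depends only on the input vectors, not on the previous row's result, so all rows can be computed independently and in parallel. An RNN, by contrast, must finish step t-1 before it can compute step t.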

The original Transformer pairs an encoder, which processes the input, with a decoder, which generates the output. Later models often keep only one half: BERT is encoder-only, while GPT models are decoder-only. The architecture handles complex tasks like text generation, translation, and summarization. Models like GPT are (very simply put) trained to predict the next word, or more precisely the next token, in a sequence based on everything that’s come before, which is why they excel at generating (mostly) coherent text.
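Generation works one token at a time: the model assigns a score (a "logit") to every candidate next token, converts the scores to probabilities with a softmax, picks one, appends it, and repeats. The sketch below shows only the picking step for a single position, with hypothetical candidates (treated as single tokens for simplicity) and made-up scores. It also includes temperature, a common sampling setting: higher temperatures flatten the distribution, so unlikely continuations, like a surprise Rick Astley link, get chosen more often.

```python
import math
import random

def next_token_probs(logits, temperature=1.0):
    """Softmax over logits / temperature (higher temperature = flatter distribution)."""
    scaled = {tok: s / temperature for tok, s in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample(probs, rng):
    """Draw one token according to its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores for what follows "Here is the tutorial video:"
logits = {"[tutorial link]": 3.0, "[docs link]": 2.0, "[Rickroll link]": 1.0}

for t in (0.5, 1.0, 2.0):
    p = next_token_probs(logits, temperature=t)
    print(f"T={t}: Rickroll probability = {p['[Rickroll link]']:.3f}")

greedy = max(logits, key=logits.get)  # greedy decoding: always the single most likely token
rng = random.Random(0)
picks = [sample(next_token_probs(logits, 2.0), rng) for _ in range(1000)]
print("greedy:", greedy, "| sampled Rickrolls at T=2.0:", picks.count("[Rickroll link]"))
```

Greedy decoding would never pick the Rickroll here, but sampling occasionally will, and more often as temperature rises.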

Training these models involves feeding them enormous amounts of data, adjusting their internal parameters when their predictions are wrong, and constantly refining those predictions. Engineers also tweak prompts and add safety nets, like Crivello’s no-Rickrolling rule, to avoid these missteps. Yet, as long as models learn from the messy, meme-filled expanse of the internet, some hilarious (and occasionally bizarre) responses are bound to slip through.
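The article doesn’t say how Crivello’s system prompt was worded, so this is a hypothetical illustration only. Besides instructions in a prompt, another kind of safety net is to check the model’s output in code before it reaches the user. The sketch below scans a reply for YouTube video IDs and blocks any on a deny-list. The function name `check_reply` and the deny-list are assumptions, and the regex covers only the two URL forms shown, assuming the standard 11-character video ID.

```python
import re

# Hypothetical deny-list: YouTube video IDs the assistant should never send.
BLOCKED_VIDEO_IDS = {"dQw4w9WgXcQ"}  # "Never Gonna Give You Up"

# Matches youtube.com/watch?v=ID and youtu.be/ID (assumes 11-char IDs of [A-Za-z0-9_-]).
YOUTUBE_ID = re.compile(r"(?:youtube\.com/watch\?v=|youtu\.be/)([A-Za-z0-9_-]{11})")

def check_reply(reply):
    """Return (ok, blocked_ids): ok is False if the reply links to a blocked video."""
    found = set(YOUTUBE_ID.findall(reply))
    blocked = found & BLOCKED_VIDEO_IDS
    return not blocked, blocked

ok, blocked = check_reply("Here's the tutorial: https://www.youtube.com/watch?v=dQw4w9WgXcQ")
print(ok, blocked)  # False {'dQw4w9WgXcQ'}
```

A filter like this only catches known links; it complements, rather than replaces, better prompts and a real library of tutorial videos to point to.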

Next time you get pranked by AI, remember it’s not personal. It’s just the machine trying to predict what comes next, and sometimes, what comes next is Rick Astley.

For a video version of this article and some interesting tidbits on how AI models work, please click here.

Thanks for reading!

Hello, my name is Mia. I am a web and software developer. I write about interesting tech news, AI, and the importance of tech literacy and education. You can also find me on Medium. DM if you need a website!