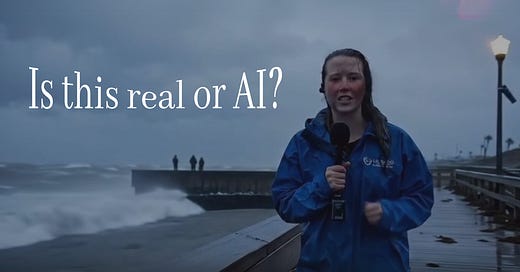

Some won't believe this video is fake. That's exactly the problem.

Google Veo 3 Just Changed Reality, and Most People Haven’t Noticed Yet

You’ve probably been seeing a lot of these AI videos in your feed: clips that look better than anything you've seen from AI before. Gone are the odd transitions, six-fingered hands, and eerie faces. Now the voices and facial expressions are becoming just human enough to slip past your brain’s natural uncanny-valley detectors.

But why are these videos popping up everywhere all of a sudden? The answer is Google Veo 3.

What Is Google Veo 3?

Unveiled on May 20, 2025, at Google I/O, Veo 3 is DeepMind’s most advanced generative video model yet, and it’s a powerhouse of audio-visual realism.

Building on earlier versions, Veo 3 now natively generates dialogue, ambient audio, sound effects, and even music. The model also respects detailed object physics and outputs high-quality 1080p video, plus 4K previews via Vertex AI.

If you’ve got a Google AI account, you can try it yourself, though the number of videos you can generate before you’re rate-limited depends on your subscription tier. But even with the lowest tier and basic prompting skills, the results are pretty jaw-dropping.

And those videos are now bleeding into our social feeds everywhere you look.

With realism this good, we're entering an era where video, a once very trusted medium, is becoming suspect. So how do we protect ourselves?

Alongside Veo 3, Google also announced SynthID Detector, a verification portal that scans uploaded media for SynthID, the invisible watermark Google embeds in content generated with its AI products.

Every video made by Veo 3 is invisibly watermarked with an embedded signature, detectable by SynthID.

So we’re safe, right?

Well, no. Let’s walk through where this starts to break down:

SynthID only detects Google-made content. If Meta, Adobe, or an indie developer makes a similar model without a watermark, SynthID won’t be able to detect it.

Watermarks can and will be stripped. Just as we’ve seen anti-detection tools for AI-generated images, we will soon see tools designed to de-watermark Veo 3 content.

I predict Meta and other major social platforms will soon roll out a SynthID-like detection system built right into their feeds. But who are we kidding? Detection only works until someone finds a workaround, and someone always does. Just like ad blockers and anti-ad-blockers, this will become a cat-and-mouse game.

What happens when you can no longer distinguish real from synthetic, and the one signal meant to mark the difference can itself be stripped away? The stakes include political propaganda, fake endorsements, and synthetic reality wars.

What Veo 3 Can’t Do (Yet)

As impressive as Veo 3’s outputs can be, the model is far from perfect.

Max length per generation: 8 seconds.

No coherent narrative stitching. Continuity between clips is janky: the same character shows up with a slightly different eye shape, and so on.

Micro-physics abnormalities. Look closely and you’ll catch objects that glide when they should fall. Hair that reacts like it's underwater. Micro-interactions that don’t compute quite right.

But these irregularities aren’t visible to everyone. In fact, most casual viewers won’t notice. The motion is just fluid enough. The eyes just real enough. The lip syncing just close enough…

Are Our Eyes About to Evolve?

We’ve been here before. Old CGI felt real, until it didn’t. Same with early Photoshop. At first glance it was believable, but years later it looked laughably bad.

So maybe we’ll adapt again. Maybe our brains will start subconsciously picking up the oddities in AI-generated physics or lighting. Or maybe we won’t. And how long until the tech advances and the micro-interactions are perfect, indistinguishable from real video?

The Philosophy of Fake

Here’s where this stops being a tech review and turns into an existential dilemma. We’ve built a society where video evidence equals truth. You “see it with your own eyes” and assume it happened. That assumption has been the cornerstone of journalism, justice systems, and personal relationships.

So what happens when your eyes lie?

Do we rewrite the rules of evidence? Do we build new norms for authenticity? Or do we sink into a kind of post-reality malaise where belief becomes tribal, and facts are whatever your favorite creator (or cult leader) says they are?

Veo 3 isn’t the end of the world. But it’s definitely the beginning of a very different one.

Right now, we still have guardrails, watermarks, and SynthID. But those are temporary. As soon as watermark-stripping tools go public, and competitors catch up without adding their own fingerprints, we’ll be staring at a feed full of lies we want to believe (or someone else needs us to believe).

And the scary part is these videos don’t even need to fool everyone, just the right someone.

You’re allowed to be amazed by what AI can generate. The tech is thrilling, brilliant, borderline miraculous. But also remember this: video evidence isn’t really evidence anymore. It’s just pixels arranged by an algorithm trying to guess what a believable world looks like.

And the most important tool you have now is NOT SynthID. It’s your brain.

Before you panic or hit share on a video (or any content really), ask: Does this actually make sense? Then verify with MULTIPLE reputable sources. Believing your eyes is optional. Thinking critically isn’t.